https://labs.play-with-k8s.com/p/d2rvh8gi715g00ev3k2g#d2rvh8gi_d2rvhjgi715g00ev3k3g

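1. Environment

Prepare three machines:

1. 192.168.0.18 (master)

2. 192.168.0.17 (worker)

3. 192.168.0.16 (worker)

Docker must be installed on all three machines beforehand. Note that Kubernetes 1.24 removed dockershim, so the kubelet talks to a CRI runtime such as containerd or cri-dockerd rather than to Docker Engine directly.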

Disable the firewall

# Stop it for the current session

systemctl stop firewalld

# Keep it off after a reboot

systemctl disable firewalld

# Check the firewall status

systemctl status firewalld # should show inactive

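Disable SELinux

# Disable for the current session (switches to permissive mode)

setenforce 0

# Disable permanently (takes effect after a reboot)

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

# Check the status

getenforce # should return Permissive (Disabled after a reboot)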
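
`getenforce` only reports the running mode; the mode that applies after the next reboot comes from the config file. The helper below is a minimal sketch for reading that setting back. `selinux_config_mode` is a hypothetical name, and the function assumes the usual `SELINUX=<mode>` line format.

```shell
# Print the SELINUX= mode configured in an SELinux config file
# (defaults to /etc/selinux/config). Commented-out lines and the
# SELINUXTYPE= line are ignored because they do not start with "SELINUX=".
selinux_config_mode() {
  config="${1:-/etc/selinux/config}"
  sed -n 's/^SELINUX=\([a-z]*\).*/\1/p' "$config" | tail -n 1
}
```

Running `selinux_config_mode` after the `sed` edit should print `disabled`.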

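Turn off swap

# Turn off for the current session

swapoff -a

# Turn off permanently (comment out the swap line)

sed -i 's/.*swap.*/#&/' /etc/fstab

# Confirm that swap is off

free -m # every value in the Swap row should be 0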
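
The `sed` command above prefixes every line that mentions swap, including lines that are already commented, so running it twice stacks `#` characters. A sketch of a repeatable variant follows; `comment_swap_entries` is a hypothetical name and GNU `sed` is assumed.

```shell
# Comment out active swap entries in an fstab-style file.
# Lines that are already commented are left alone, so the function
# can be run repeatedly without stacking '#' characters.
comment_swap_entries() {
  fstab="${1:-/etc/fstab}"
  sed -i -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}
```

Called without an argument it edits `/etc/fstab`; pass a copy of the file first to preview the result.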

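Configure kernel parameters

# Load the br_netfilter module first; the net.bridge.* keys do not exist until it is loaded

modprobe br_netfilter

# Load the module automatically on boot

echo br_netfilter > /etc/modules-load.d/k8s.conf

# Confirm the module is loaded

lsmod | grep br_netfilter

# Write the kernel parameters Kubernetes needs

cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.swappiness = 0
EOF

# Apply the kernel parameters

sysctl --system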
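
To confirm that the settings are actually in effect, the values can be read back from procfs. This is a minimal sketch: `check_k8s_sysctls` is a hypothetical name, and the optional directory argument exists only so the function can be pointed at a test tree instead of `/proc/sys`.

```shell
# Verify that the kernel parameters Kubernetes networking relies on are set to 1.
# Values are read from procfs, where a key such as net.ipv4.ip_forward
# maps to the path net/ipv4/ip_forward.
check_k8s_sysctls() {
  root="${1:-/proc/sys}"
  rc=0
  for key in net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables \
             net.ipv4.ip_forward; do
    path="$root/$(printf '%s' "$key" | tr . /)"
    if [ -r "$path" ]; then
      val=$(cat "$path")
    else
      val="missing"   # net.bridge.* only exists once br_netfilter is loaded
    fi
    if [ "$val" = "1" ]; then
      echo "ok   $key = 1"
    else
      echo "FAIL $key = $val"
      rc=1
    fi
  done
  return "$rc"
}
```

A `missing` result for the `net.bridge.*` keys usually means `br_netfilter` was not loaded.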

Configure time synchronization

# Install chrony

yum install -y chrony

# Start it and enable it on boot

systemctl enable chronyd && systemctl start chronyd

# Check the synchronization status

chronyc sources
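
In the `chronyc sources` listing, the server chrony is currently synchronized to is marked with `^*` at the start of its line. A small sketch that turns this into a pass/fail check (`has_synced_source` is a hypothetical name):

```shell
# Succeed if `chronyc sources` output (read from stdin) contains a source
# marked "^*", which is how chrony flags the server it is synced to.
has_synced_source() {
  grep -q '^\^\*'
}
```

Usage: `chronyc sources | has_synced_source && echo "time is synchronized"`.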

2. Installation

2.1 Configure the Alibaba Cloud Kubernetes repository

Do this on all three machines.

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
exclude=kubelet kubeadm kubectl
EOF

2.2 Install kubeadm, kubelet, and kubectl

Do this on all three machines.

# Install a pinned version (1.24.x was the stable release when this was written)

yum install -y kubelet-1.24.10-0 kubeadm-1.24.10-0 kubectl-1.24.10-0 --disableexcludes=kubernetes

# Start kubelet and enable it on boot

systemctl enable kubelet && systemctl start kubelet

# Check the versions

kubeadm version

kubectl version --client

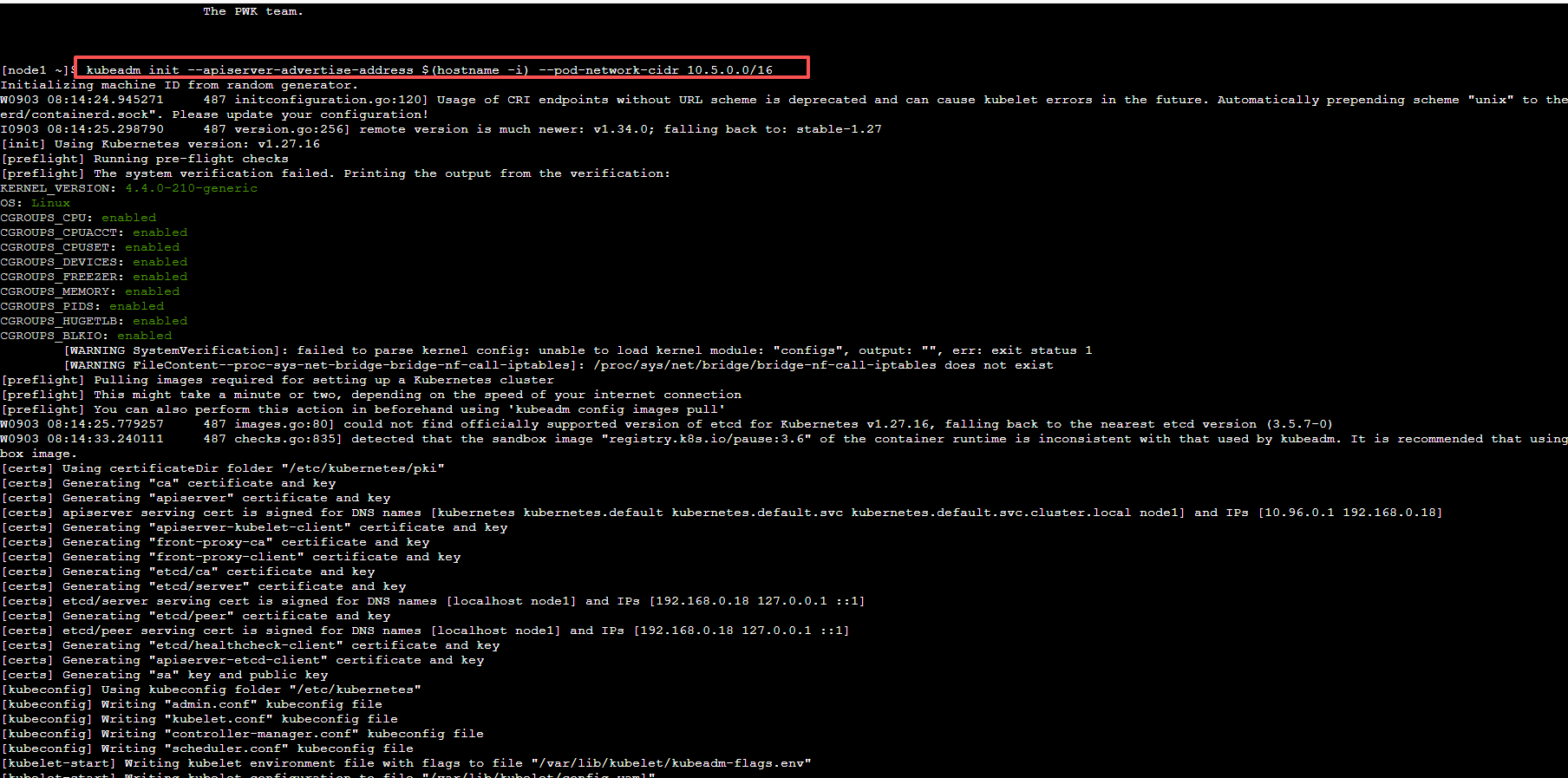

3. Deploy the Kubernetes master node

3.1 Initialize the master node

# Initialize the cluster (pulling images from the Alibaba Cloud registry)

kubeadm init \
--image-repository registry.aliyuncs.com/google_containers \
--kubernetes-version v1.24.10 \
--apiserver-advertise-address=192.168.0.18 \
--pod-network-cidr=10.5.0.0/16 \
--service-cidr=10.96.0.0/12 \
--token-ttl 0

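Parameters:

--image-repository: image registry to pull from (the Alibaba Cloud mirror, for use inside China)

--kubernetes-version: Kubernetes version to install

--apiserver-advertise-address: IP address of the master node

--pod-network-cidr: CIDR range for the Pod network (must match the network plugin's configuration)

--service-cidr: CIDR range for the Service network

--token-ttl 0: the join token never expires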
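
The same settings can also be kept in a kubeadm config file, which is easier to review and reuse than a long command line. The sketch below assumes the `kubeadm.k8s.io/v1beta3` config API used by kubeadm 1.24 and the master IP listed in section 1 (192.168.0.18). `write_kubeadm_config` and the file name `kubeadm-config.yaml` are hypothetical, and treating `ttl: "0s"` as the equivalent of `--token-ttl 0` is an assumption worth checking on your version.

```shell
# Write a kubeadm config file that mirrors the init flags listed above.
# Field names follow the kubeadm.k8s.io/v1beta3 API; compare them with
# `kubeadm config print init-defaults` on your own version before use.
write_kubeadm_config() {
  out="${1:-kubeadm-config.yaml}"
  cat > "$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.0.18   # --apiserver-advertise-address
bootstrapTokens:
  - ttl: "0s"                      # --token-ttl 0 (assumed equivalent)
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.24.10        # --kubernetes-version
imageRepository: registry.aliyuncs.com/google_containers   # --image-repository
networking:
  podSubnet: 10.5.0.0/16           # --pod-network-cidr
  serviceSubnet: 10.96.0.0/12      # --service-cidr
EOF
}
```

Usage: `write_kubeadm_config && kubeadm init --config kubeadm-config.yaml`.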

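Red box 1: initialization succeeded.

Red box 2: the commands to run next.

Red box 3: the join command, including the token, that the other worker machines run to join the cluster. Keep a copy of it; if you lose it, regenerate it with:

kubeadm token create --print-join-command

Example output:

kubeadm join 192.168.0.18:6443 --token v0vnjl.gh8pv1t1fr8mopw3 \
--discovery-token-ca-cert-hash sha256:d4e209ffbdced9034a12fbbea6959bce11b6f827cb01ca7eb00575742473f555

3.2 Configure kubectl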

# Configure kubectl

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Test the kubectl configuration

kubectl get nodes # should list the master node with status NotReady

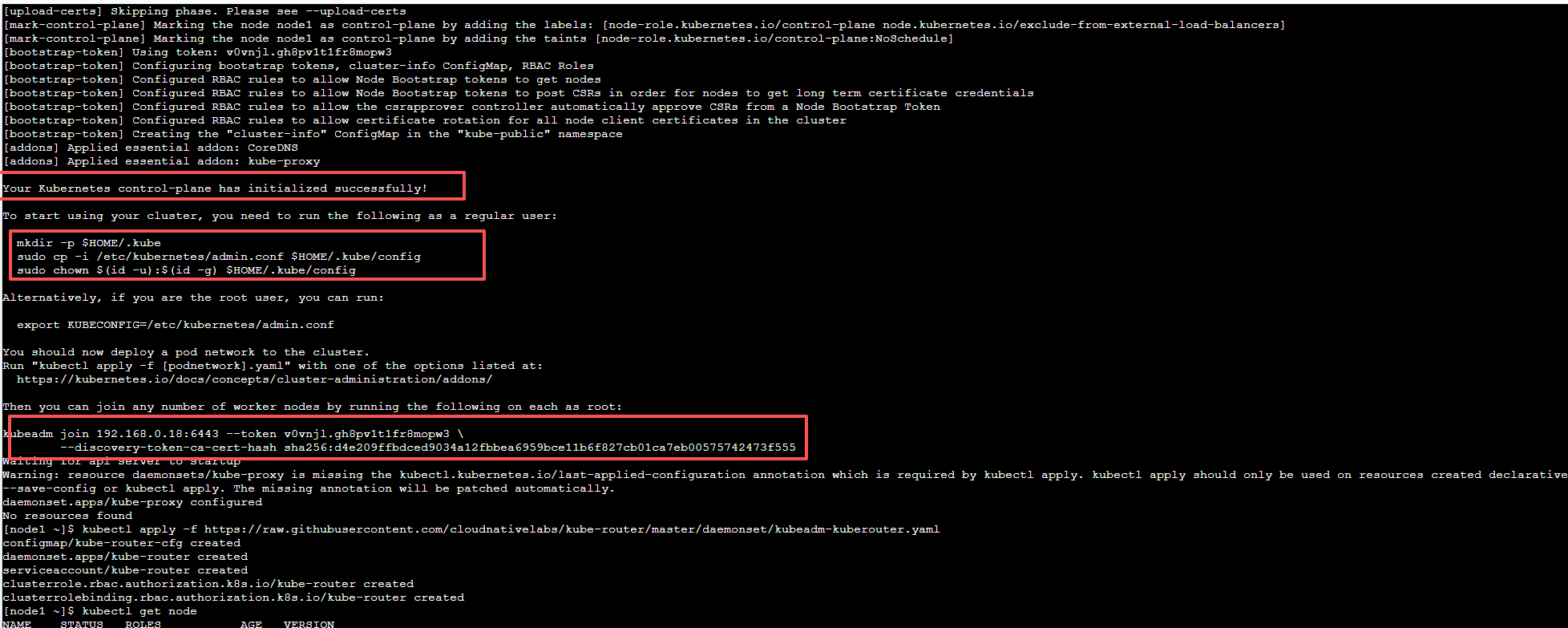

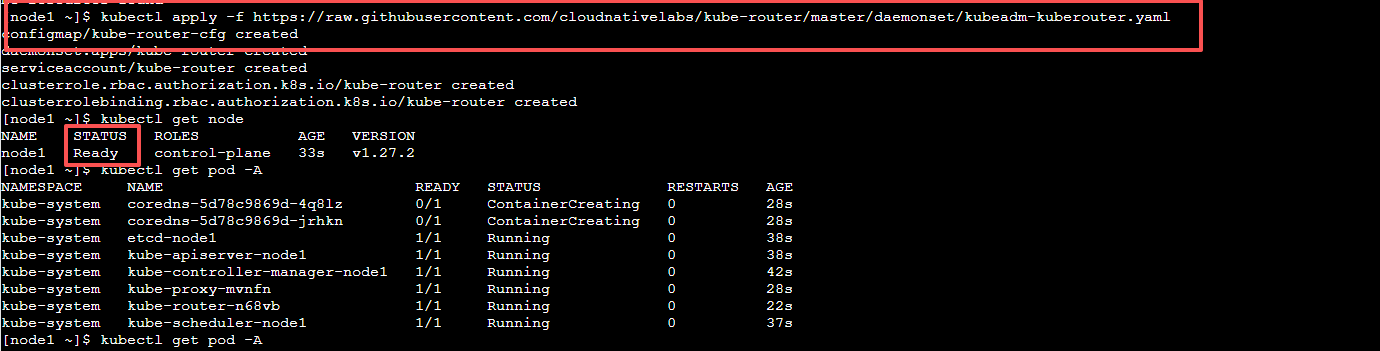

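3.3 Install a network plugin

Install only one of the three options below.

1. Option 1: kube-router

kubectl apply -f https://raw.githubusercontent.com/cloudnativelabs/kube-router/master/daemonset/kubeadm-kuberouter.yaml

Once the plugin is installed successfully the node status changes to Ready; until then it stays NotReady.

Contents of kubeadm-kuberouter.yaml: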

apiVersion: v1
kind: ConfigMap
metadata:
  name: kube-router-cfg
  namespace: kube-system
  labels:
    tier: node
    k8s-app: kube-router
data:
  cni-conf.json: |
    {
      "cniVersion":"0.3.0",
      "name":"mynet",
      "plugins":[
        {
          "name":"kubernetes",
          "type":"bridge",
          "bridge":"kube-bridge",
          "isDefaultGateway":true,
          "ipam":{
            "type":"host-local"
          }
        }
      ]
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    k8s-app: kube-router
    tier: node
  name: kube-router
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: kube-router
      tier: node
  template:
    metadata:
      labels:
        k8s-app: kube-router
        tier: node
    spec:
      priorityClassName: system-node-critical
      serviceAccountName: kube-router
      serviceAccount: kube-router
      containers:
      - name: kube-router
        image: docker.io/cloudnativelabs/kube-router
        imagePullPolicy: Always
        args:
        - --run-router=true
        - --run-firewall=true
        - --run-service-proxy=false
        - --bgp-graceful-restart=true
        env:
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: KUBE_ROUTER_CNI_CONF_FILE
          value: /etc/cni/net.d/10-kuberouter.conflist
        livenessProbe:
          httpGet:
            path: /healthz
            port: 20244
          initialDelaySeconds: 10
          periodSeconds: 3
        resources:
          requests:
            cpu: 250m
            memory: 250Mi
        securityContext:
          privileged: true
        volumeMounts:
        - name: lib-modules
          mountPath: /lib/modules
          readOnly: true
        - name: cni-conf-dir
          mountPath: /etc/cni/net.d
        - name: kubeconfig
          mountPath: /var/lib/kube-router/kubeconfig
          readOnly: true
        - name: xtables-lock
          mountPath: /run/xtables.lock
          readOnly: false
      initContainers:
      - name: install-cni
        image: docker.io/cloudnativelabs/kube-router
        imagePullPolicy: Always
        command:
        - /bin/sh
        - -c
        - set -e -x;
          if [ ! -f /etc/cni/net.d/10-kuberouter.conflist ]; then
            if [ -f /etc/cni/net.d/*.conf ]; then
              rm -f /etc/cni/net.d/*.conf;
            fi;
            TMP=/etc/cni/net.d/.tmp-kuberouter-cfg;
            cp /etc/kube-router/cni-conf.json ${TMP};
            mv ${TMP} /etc/cni/net.d/10-kuberouter.conflist;
          fi;
          if [ -x /usr/local/bin/cni-install ]; then
            /usr/local/bin/cni-install;
          fi;
        volumeMounts:
        - mountPath: /etc/cni/net.d
          name: cni-conf-dir
        - mountPath: /etc/kube-router
          name: kube-router-cfg
        - name: host-opt
          mountPath: /opt
      hostNetwork: true
      hostPID: true
      tolerations:
      - effect: NoSchedule
        operator: Exists
      - key: CriticalAddonsOnly
        operator: Exists
      - effect: NoExecute
        operator: Exists
      volumes:
      - name: lib-modules
        hostPath:
          path: /lib/modules
      - name: cni-conf-dir
        hostPath:
          path: /etc/cni/net.d
      - name: kube-router-cfg
        configMap:
          name: kube-router-cfg
      - name: kubeconfig
        hostPath:
          path: /var/lib/kube-router/kubeconfig
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate
      - name: host-opt
        hostPath:
          path: /opt
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kube-router
  namespace: kube-system
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kube-router
  namespace: kube-system
rules:
  - apiGroups:
    - ""
    resources:
      - namespaces
      - pods
      - services
      - nodes
      - endpoints
    verbs:
      - list
      - get
      - watch
  - apiGroups:
    - "networking.k8s.io"
    resources:
      - networkpolicies
    verbs:
      - list
      - get
      - watch
  - apiGroups:
    - extensions
    resources:
      - networkpolicies
    verbs:
      - get
      - list
      - watch
  - apiGroups:
    - "coordination.k8s.io"
    resources:
      - leases
    verbs:
      - get
      - create
      - update
  - apiGroups:
    - ""
    resources:
      - services/status
    verbs:
      - update
  - apiGroups:
    - "discovery.k8s.io"
    resources:
      - endpointslices
    verbs:
      - get
      - list
      - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kube-router
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-router
subjects:
- kind: ServiceAccount
  name: kube-router
  namespace: kube-system

2. Option 2: install the Calico network plugin (recommended)

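# Download the Calico manifest

wget https://docs.projectcalico.org/v3.23/manifests/calico.yaml --no-check-certificate

# Change the Pod network CIDR if the manifest's value differs from the one passed to kubeadm init (10.5.0.0/16 in section 3.1)

# sed -i 's/192.168.0.0\/16/10.5.0.0\/16/g' calico.yaml

# Apply the manifest

kubectl apply -f calico.yaml

# Check the Calico Pod status

kubectl get pods -n kube-system -l k8s-app=calico-node

calico.yaml has been uploaded to the cloud drive.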

3. Option 3: install the Flannel network plugin (alternative)

# Create a working directory and download the manifest

mkdir -p ~/k8s && cd ~/k8s

wget https://raw.githubusercontent.com/flannel-io/flannel/v0.17.0/Documentation/kube-flannel.yml --no-check-certificate

# Inside China, the following mirror can be used instead

# wget https://kuboard.cn/install-script/flannel/v0.17.0/kube-flannel.yml

# Apply the manifest

kubectl apply -f kube-flannel.yml

# Check the Flannel Pod status

kubectl get pods -n kube-system -l app=flannel
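
The value given to `--pod-network-cidr` has to match the network configured in the plugin manifest. The upstream kube-flannel.yml ships with `10.244.0.0/16` in its `net-conf.json` section, while the init command in section 3.1 uses `10.5.0.0/16`, so the manifest needs adjusting before `kubectl apply`. A minimal sketch, assuming the downloaded file still contains the upstream default; `set_flannel_network` is a hypothetical name.

```shell
# Replace flannel's default pod network in a downloaded kube-flannel.yml
# with the CIDR that was given to `kubeadm init --pod-network-cidr`.
set_flannel_network() {
  manifest="$1"
  cidr="$2"
  sed -i "s#\"Network\": \"10\.244\.0\.0/16\"#\"Network\": \"$cidr\"#" "$manifest"
}
```

Usage: `set_flannel_network kube-flannel.yml 10.5.0.0/16`, followed by `kubectl apply -f kube-flannel.yml`.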

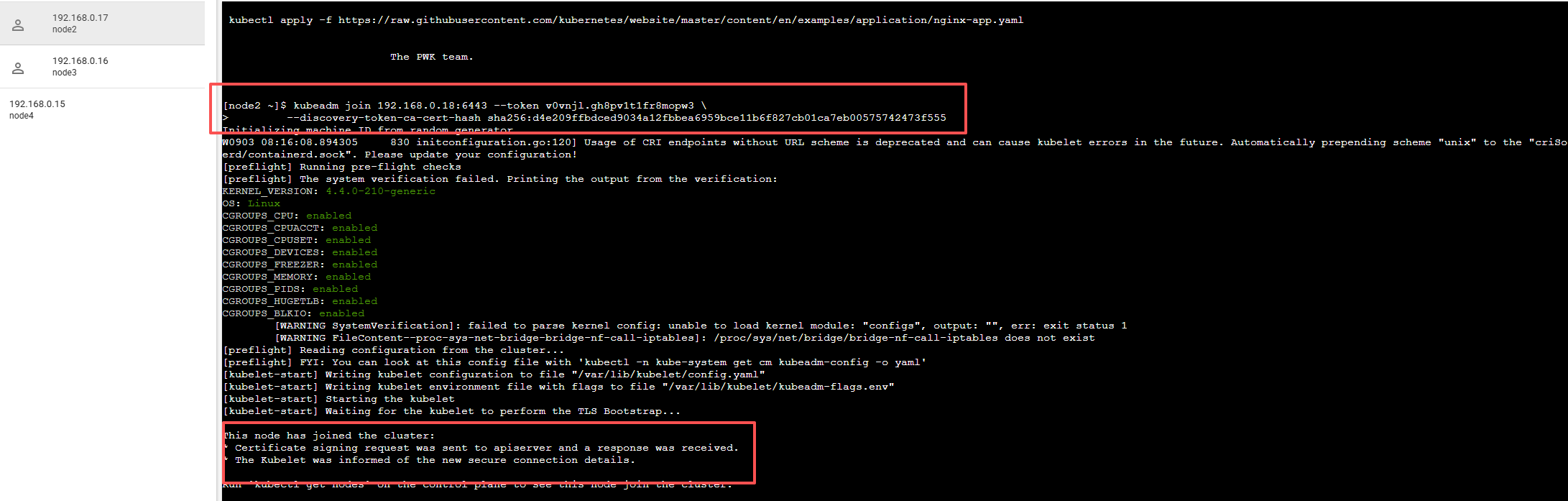

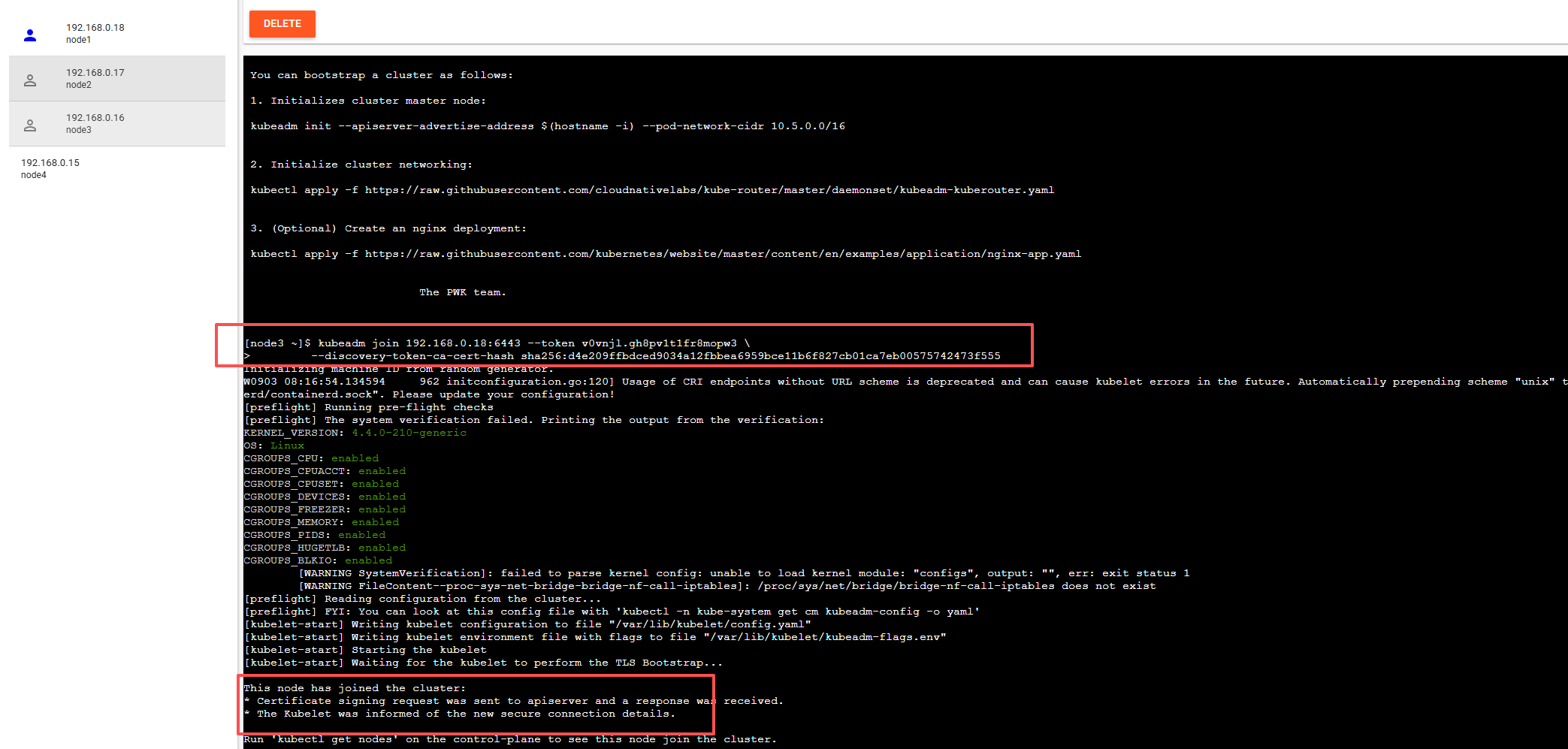

4. Deploy the worker nodes

On each of the other worker nodes, run the join command generated above:

kubeadm join 192.168.0.18:6443 --token v0vnjl.gh8pv1t1fr8mopw3 \
--discovery-token-ca-cert-hash sha256:d4e209ffbdced9034a12fbbea6959bce11b6f827cb01ca7eb00575742473f555
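
A join command that was damaged while being copied (a truncated hash, extra characters stuck to the end) is a common reason for `kubeadm join` to fail. The two helpers below are a sketch for checking the pieces before running the command; `valid_token` and `valid_ca_cert_hash` are hypothetical names, and they check only the format, not whether the values belong to this cluster.

```shell
# A bootstrap token is 6 characters, a dot, then 16 characters, all [a-z0-9].
valid_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# The discovery hash is "sha256:" followed by 64 lowercase hex digits.
valid_ca_cert_hash() {
  printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-f]{64}$'
}
```

Usage: `valid_token v0vnjl.gh8pv1t1fr8mopw3 && echo "token format ok"`.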

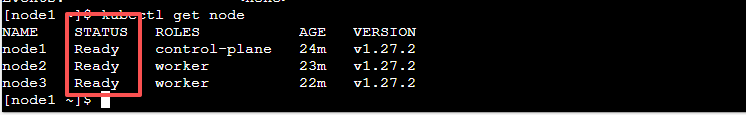

On the master node, check that the nodes have joined:

kubectl get node

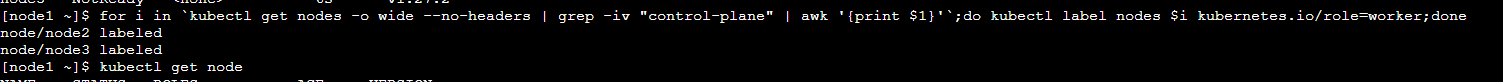
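
When scripting the setup, it helps to get a non-zero exit status while any node is still not Ready. The sketch below filters the output of `kubectl get nodes --no-headers`; `not_ready_nodes` is a hypothetical name.

```shell
# Read `kubectl get nodes --no-headers` output on stdin and print the name
# of every node whose STATUS column is not exactly "Ready".
# The exit status is non-zero if any such node was found.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1; bad = 1 } END { exit bad + 0 }'
}
```

Usage: `kubectl get nodes --no-headers | not_ready_nodes || echo "some nodes are not Ready yet"`.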

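The ROLES column of a newly joined node shows <none>. To make the output easier to read, label the node:

kubectl label nodes <node name> kubernetes.io/role=worker

Or label every non-control-plane node in a loop: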

for i in `kubectl get nodes -o wide --no-headers | grep -iv "control-plane" | awk '{print $1}'`;do kubectl label nodes $i kubernetes.io/role=worker;done
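
The loop above finds workers by filtering the human-readable table with `grep`. A variant that selects nodes by label instead is sketched below. It assumes control-plane nodes carry the `node-role.kubernetes.io/control-plane` label, which kubeadm 1.24 sets; `label_workers` is a hypothetical name.

```shell
# Label every node that lacks the control-plane role label as a worker.
# Selecting by label avoids parsing the table output with grep and awk.
label_workers() {
  for node in $(kubectl get nodes -l '!node-role.kubernetes.io/control-plane' -o name); do
    # --overwrite makes the function safe to run again on labelled nodes
    kubectl label --overwrite "$node" kubernetes.io/role=worker
  done
}
```

Run `label_workers` on the master, then check the ROLES column with `kubectl get nodes`.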

5. Test: deploy nginx

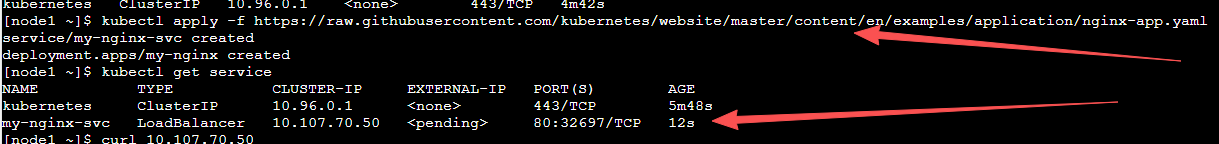

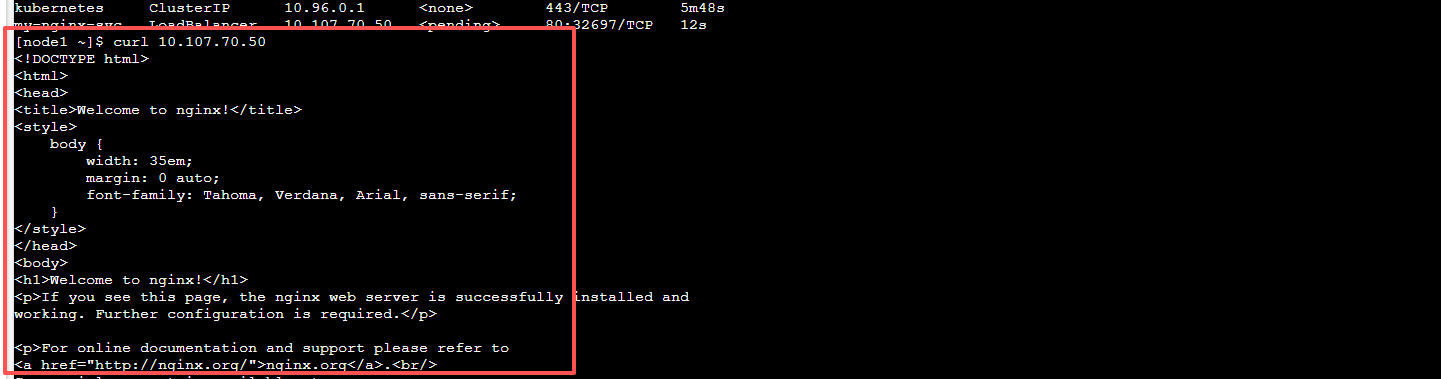

kubectl apply -f https://raw.githubusercontent.com/kubernetes/website/master/content/en/examples/application/nginx-app.yaml

Contents of nginx-app.yaml:

apiVersion: v1
kind: Service
metadata:
  name: my-nginx-svc
  labels:
    app: nginx
spec:
  type: LoadBalancer
  ports:
  - port: 80
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-nginx
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14.2
        ports:
        - containerPort: 80

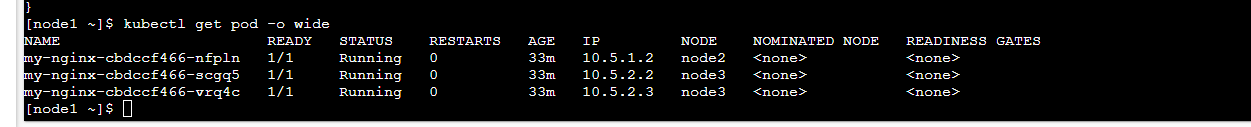

查看nginx运行状态

查看服务

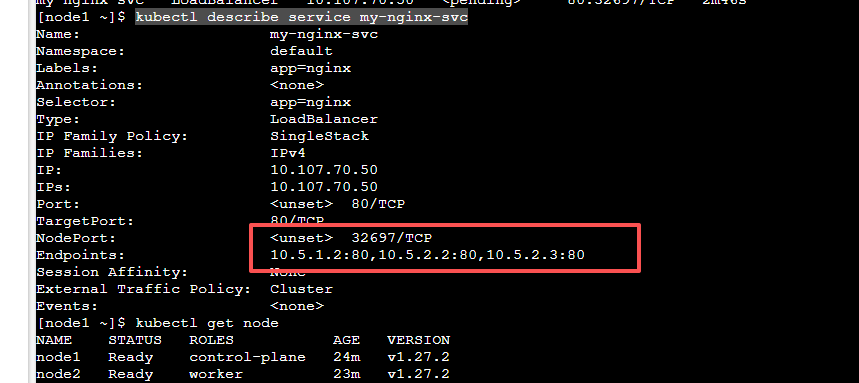

kubectl get service查看服务详情

kubectl describe service my-nginx-svc可以看到部署到了三台服务器中
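
On a cluster without a cloud load balancer, a Service of type LoadBalancer usually keeps its EXTERNAL-IP as pending, but it still gets a NodePort that can be used for a quick test. The sketch below reads that port and fetches the nginx welcome page through a node IP. `service_node_port` and `check_nginx` are hypothetical names, and `curl` is assumed to be installed.

```shell
# Print the NodePort allocated to a Service's first port.
service_node_port() {
  kubectl get service "$1" -o jsonpath='{.spec.ports[0].nodePort}'
}

# Fetch the nginx welcome page through a node IP and the Service's NodePort.
check_nginx() {
  node_ip="$1"
  port=$(service_node_port my-nginx-svc)
  curl -fsS --max-time 5 "http://$node_ip:$port/" | grep -q 'Welcome to nginx'
}
```

Usage: `check_nginx 192.168.0.17 && echo "nginx is reachable"`.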
